It is widely accepted that performance in any task improves with practice. In contrast to other forms of training such as education, practice holds that a skill can be mastered simply by performing and rehearsing a behaviour repeatedly, and it has been adopted in areas such as athletic performance, martial arts and the playing of musical instruments. A refinement of the concept is deliberate practice, which extends simple repetition of a task by incorporating a cycle of rigorous assessment, feedback, and improvement.

This presents something of a quandary for militaries in ensuring their readiness and effectiveness: skills fade with non-use, but they are unable to simply engage in permanent, ongoing warfare in order to retain and further hone their skills, for fairly obvious reasons. Even in the unlikely event that it was politically acceptable to their governing regime, it would not be a sustainable approach and would directly inflict damage on the very forces it was seeking to improve: a concert pianist wouldn’t continue to improve for very long if they lost a finger during each practice session. Another approach is clearly needed. The method that is widely adopted is the use of military simulations, also known informally as war games, in which warfare can be tested and refined without the need for actual hostilities.

In wargames, two teams command opposing forces in order to provide a realistic simulation of an armed conflict. This can take the form of military field exercises in which the two teams perform mock combat actions such as friendly warships firing dummy rounds at each other or else can be purely mental activities consisting of abstract modelling “on paper”.

In a wargaming scenario, the two teams are assigned the colours “red” and “blue”. The blue team is the “friendly” team, and the red team is the “enemy”. The role of the blue team is relatively intuitive and doesn’t need much explanation: it is simply to act as the larger organisation itself would act, using the resources it has, in order to defend itself. The red team is the novel concept. It is a team that is drawn from the same cooperating militaries as the blue team but plays the role of the enemy or competitor in order to model possible enemy behaviour given their resources, situation, disposition, motivation, and attitudes, and to provide feedback from that perspective.

The term “Red Team” was first applied to indicate the opposing force in war games conducted by western nations during the Cold War and is thought to be a reference to the predominantly red flags of Communist nations such as the former USSR and PRC (People’s Republic of China), with the western nations being the Blue Team.

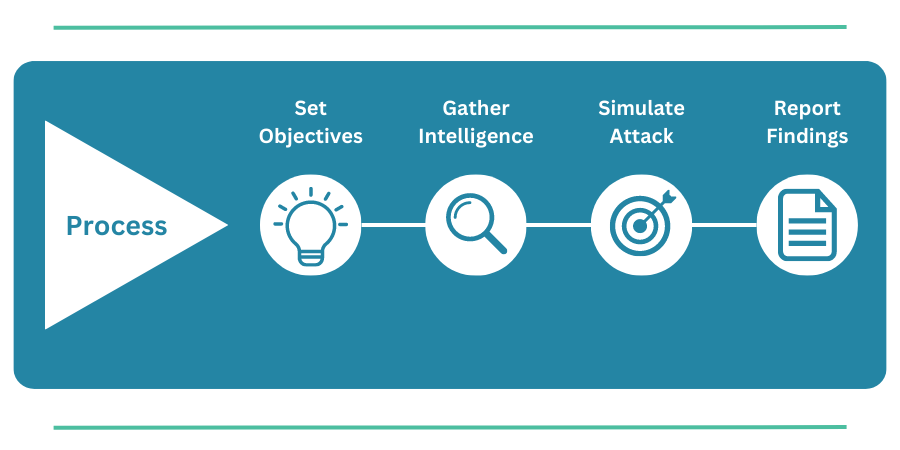

The US military adopted the term “red-teaming” to describe war-gaming activities in a structured, repeating, and iterative process that used practice to help to continuously challenge plans, operations, concepts, organizations, and capabilities by pitting them against adversaries in various scenarios. The key is that the team playing the adversary (red team) must leverage tactics, techniques, and resources as appropriate to accurately emulate the desired threat actor.

Red teams have been adopted in many fields. Within cybersecurity, the adoption has been a gradual and iterative process over a number of decades, and it is worth reviewing how that adoption occurred over time.

The original red team exercises were extremely broad in scope, involving large-scale virtual deployments of the entire military forces of nations. However, in the 1980s, the concept was adapted to a more recognisable scale and purpose within the United States military. During that decade, a team called “Red Cell” was formed within the US Navy’s Naval Special Warfare Development Group (NSWDG), commonly known as “SEAL Team Six”. The Red Cell (also known as OP-06D) was a special unit designed to test the security of American military installations for weaknesses by performing simulated attacks against them. Their methods were intended to reflect those a real attacker would use: they were therefore relatively controversial and unbounded, including using false IDs, dismantling protective fences, taking hostages, planting “bombs”, and even carrying out real kidnappings of high-ranking personnel. The operations that the cell performed were recorded and subsequently used to highlight vulnerabilities that needed to be addressed.

Following this model of small-scale simulations to highlight vulnerabilities, the same approach was used (still within the US military) in security evaluations of the early Multics (“Multiplexed Information and Computing Service”) operating system, a forerunner of later UNIX systems. To ensure that the system was suitable for storing highly secret data, the evaluation sought to uncover vulnerabilities in the hardware, software and procedures used, by performing tests with the same kinds of access an intruder might have.

The United States National Security Agency (NSA) formalised this approach and introduced certifications such as CNSS 4011 that cover the performance of structured, ethical hacking techniques, in which aggressor teams are called “red” teams and defender teams are called “blue” teams.

This concept of red teaming within a framework of ethical hacking (authorised hacking) to assess the security of computer systems was adopted outside of the US military relatively early, in first-generation vulnerability scanning tools such as SATAN (“Security Administrator Tool for Analyzing Networks”), which was developed to test for vulnerabilities exposed across networks. These tools were intended to be used by teams to examine vulnerabilities in their own network and to provide an alternative to purely theoretical threat modelling and the blind application of security controls without holistic, whole-environment testing. Hackers operating in an authorised manner under an ethical hacking initiative are often referred to as “white hat” (confused with all these colours yet?!) and are tasked, with authorised consent, with performing simulated attacks against a given system, network, or service.

The primary purpose of red teaming has traditionally been the highlighting of vulnerabilities in order to allow for their remediation, reducing the overall risk. However, red teaming can also be used to test and refine incident response processes, by seeing if logging and monitoring systems operated by blue teams are able to detect the threat and evaluating existing procedures and processes for how the blue team react in response to red team aggression.

The red team will attempt to attack systems and exfiltrate sensitive information in any way possible while the blue team monitors and responds to detected threats using intelligence gathering (OSINT), available logging and monitoring (SIEM) and existing security incident response processes (SOC).
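The blue team's monitoring role described above can be illustrated with a minimal sketch. It assumes a hypothetical log format (`timestamp EVENT key=value ...`) and hypothetical function names; a real SIEM would ingest and correlate many log sources and apply far richer detection rules. The sketch only counts failed logins per source address and flags sources that reach a threshold, which is the kind of signal a red team's password-guessing attempt might generate.

```python
from collections import Counter

# Hypothetical log lines in an assumed format; not taken from any real product.
SAMPLE_LOG = [
    "2024-01-01T02:58:10 FAILED_LOGIN user=admin src=203.0.113.7",
    "2024-01-01T02:58:12 FAILED_LOGIN user=admin src=203.0.113.7",
    "2024-01-01T02:58:15 FAILED_LOGIN user=root src=203.0.113.7",
    "2024-01-01T09:01:44 FAILED_LOGIN user=alice src=198.51.100.4",
    "2024-01-01T09:01:59 LOGIN_OK user=alice src=198.51.100.4",
]


def failed_logins_by_source(lines):
    """Count FAILED_LOGIN events per source address."""
    counts = Counter()
    for line in lines:
        fields = line.split()
        # Skip malformed lines and events other than failed logins.
        if len(fields) < 2 or fields[1] != "FAILED_LOGIN":
            continue
        # Parse the remaining key=value pairs into a dictionary.
        attrs = dict(f.split("=", 1) for f in fields[2:] if "=" in f)
        src = attrs.get("src")
        if src:
            counts[src] += 1
    return counts


def sources_over_threshold(lines, threshold=3):
    """Return the sorted source addresses with at least `threshold` failures."""
    counts = failed_logins_by_source(lines)
    return sorted(src for src, n in counts.items() if n >= threshold)
```

Calling `sources_over_threshold(SAMPLE_LOG)` on the sample data flags only the address with three failures. The threshold of three is an arbitrary assumption; in a red team exercise, the interesting question is whether such a rule fires at all and whether anyone responds to it.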

A real security incident can paradoxically lead to improved security, since teams become more familiar with processes such as threat detection, containment, eradication, and recovery. They are also given the opportunity to use lessons learned from the incident to improve the security programme, for example by introducing new controls or fixing vulnerabilities. Red teaming permits this cycle of continual improvement to be conducted without a real incident occurring.

Red teaming can be used at either the strategic or tactical levels. That is, the terms “blue team” and “red team” can be used as a noun to informally describe permanent roles that are focused on two different aspects of security; or they can be applied as a verb “red teaming” to describe a one-off or infrequent exercise that borrows team members from their typical day to day roles in order to conduct specific modelling or wargaming of a scenario in a short and time-bound test.

Everything that improves the defensive security posture of an organisation could be construed as Blue Team. There is an emphasis within “blue” teams on discovering and defending against attacks, and many smaller organisations (where they have a dedicated cybersecurity team at all) will typically build out the information security team as a “blue team” initially, even if they do not explicitly use the term or are not aware of alternatives.

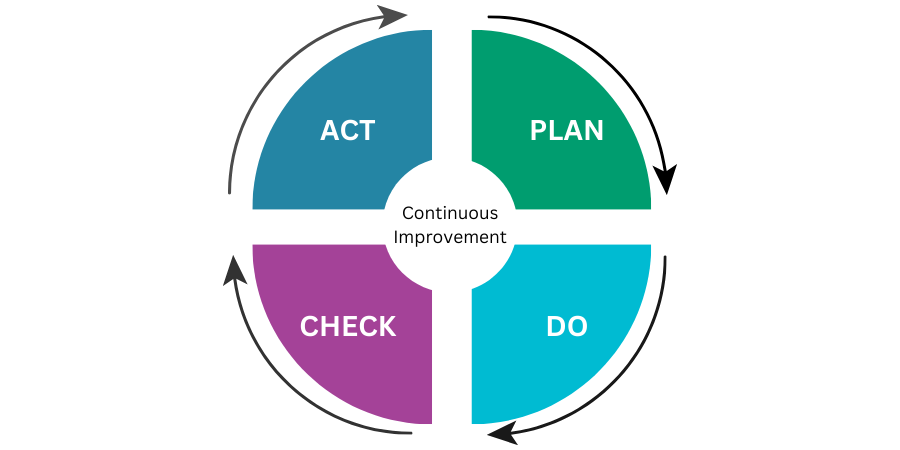

The “plan–do–check–act” (PDCA) model of management is frequently used to model a given process or function. It describes how objectives and processes for a team or function are established, conducted, checked, and then adjusted based on findings, with all four elements necessary in order to deliver continual improvement. Within this model, when organising an information security team by responsibility, it is possible to see the responsibilities of the “Blue Team” as the “Do” part of the cycle. Typically consisting of roles such as security architects and security administrators, blue team members will install, operate, and control security controls and protection measures in order to deliver on the security objectives set by management.

“Red team” roles tend to be slightly more specialist and are introduced later in the maturity and development of an organisation’s security team as it, and the parent company, begin to scale. The red team can include security consultants, auditors, security analysts, and penetration testers. Its role corresponds to the “Check” part of the cycle: to assess controls and process performance against the objectives of the ISMS (information security management system).

Effective security programme management involves “closing the loop”: the assessment provided by the red team feeds the “act/adjust” step, in which corrective and preventative actions are taken based on the red team’s findings. Without the functions of a red team being performed, an organisation’s IT department is to some extent working “blind”: controls are implemented and operated but not assessed, and no guidance is provided to direct continual improvement of security performance.

“Red teams” can also be created in an ad hoc and temporary fashion by drafting or conscripting permanent team members from other roles to take part in an attack simulation exercise known as “red teaming” or “wargaming”. The red team provides a similar function to that above, but the team mustered is a collection of employees who are seconded for a short time (possibly hours) to take part in an exercise, where their full-time job may lie in a different area.

Also known as a tiger team, the red team typically consists of specialists who are assembled to perform the role of simulated attackers. The term originated within aeronautics, where it referred to teams selected primarily on the basis of their experience, energy, and imagination and assigned to aggressively and relentlessly track down every possible source of failure in spacecraft subsystems.

These same characteristics are found in most penetration testers: ethical hackers who are employed (and authorised) to attempt to break into a computer system. The blue team (defending team) is aware of the penetration test and is ready to mount a defense. A red team exercise or wargame will typically be more intensive than a penetration test, involving a larger team of attackers, and may additionally introduce a wider scope or reduced operating constraints, as well as an element of surprise.

Penetration testing is a form of ethical hacking that is conducted in an authorised fashion and is typically contracted and scheduled. It concentrates on attacking software and computer systems by scanning ports, checking for known defects in detected protocols and services, and attempting to verify them via safe exploitation techniques. Penetration tests are often bound by a very tight and formally specified scope and may relate to a single system or service.
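The port-scanning step mentioned above can be sketched in a few lines. This is a minimal illustration only, assuming a plain TCP connect check using Python's standard `socket` module; the function name `open_tcp_ports` is hypothetical, and real penetration testing tools do considerably more (service fingerprinting, rate control, other scan types). It should only ever be pointed at hosts that are explicitly in the agreed scope.

```python
import socket


def open_tcp_ports(host, ports, timeout=0.5):
    """Return the subset of `ports` on `host` that accept a TCP connection.

    Only run this against systems you are authorised to test.
    """
    found = []
    for port in ports:
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
            sock.settimeout(timeout)
            # connect_ex returns 0 on success instead of raising an exception.
            if sock.connect_ex((host, port)) == 0:
                found.append(port)
    return found
```

For example, `open_tcp_ports("127.0.0.1", [22, 80, 443])` reports which of those ports are listening on the local machine. Identifying an open port is only the first step: the tester would then check the detected service for known defects, as described above.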

A full-blown red teaming exercise by contrast is often much broader in participation and scope and can be “gloves off” in terms of not requiring a formal scope to be prepared in advance but granting relatively free range of action to an attacking team. Its goal is to highlight any exploitable weakness whether it be in a process or procedure, not simply discovering a technical vulnerability. Red teaming can include activities not seen in penetration tests, such as social engineering (emailing staff to try and trick them into providing passwords for example). It can also aim to highlight procedural issues, such as performing a simulated attack late at night to test if there is out of hours coverage of monitoring capabilities.

Additionally, not all red teaming necessarily involves any actual penetration testing and probing of systems at all, especially if the primary purpose is to evaluate response processes for weakness, as opposed to locating technical vulnerabilities in operated hardware and software systems.

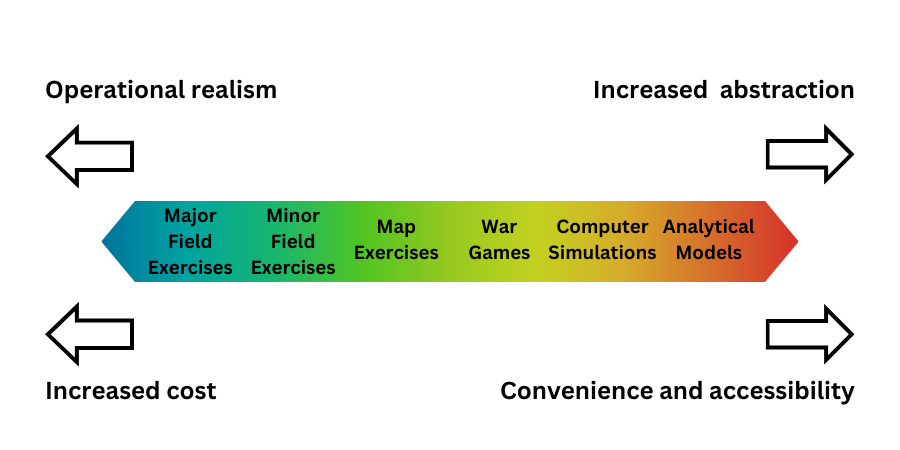

Different types of red teaming exercise may be employed, and the form that is selected depends on factors such as the resources available for the exercise (in terms of time, personnel, and finances) as well as the level of acceptable risk to operated systems: the more “real” an attack scenario is, the greater the likelihood of disruption to service. Similar to military simulations, red teaming in cybersecurity can be performed on a scale where there is a tradeoff between cost and convenience, with the most useful forms of testing being the most expensive and time-consuming to conduct:

The most basic forms of testing for security incident response procedures are a simple review of documentation, or the conducting of drills in which blue teams step through scenarios on a regularly scheduled basis to instil and reinforce training through repetition and practice.

The role of red teams comes into play if modelling is taken to the next level via a structured walk-through test (also known as a tabletop exercise). Whilst still theoretical and performed “on paper” with no interaction with live systems, tabletop exercises introduce a red team that proposes a given attack scenario and challenges, via discussion and modelling, how a blue team would respond to a threat. The red team then acts as the attacker, reacting and introducing new elements to stress-test blue team response procedures.

Active testing by the red team is conducted if testing is taken one step further to perform a simulated test or functional exercise or full interruption test, conducting actual attacks within the live environment. This is the most realistic but also the highest risk approach.

Even though red teaming aims to test systems using true-to-life methods in order to simulate attacks, it can never be exhaustive or provide definitive assurance that all scenarios have been covered and all vulnerabilities detected. Modern systems are highly complex and have millions of discrete components, systems, modules, and code functions, which combinatorially represent an almost incalculable number of potential exploit combinations. Testing given scenarios is time-consuming and can only validate a relatively small number of potential attack scenarios.
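A rough back-of-the-envelope calculation shows how quickly the numbers grow. The sketch below is purely illustrative and makes a simplifying assumption not found in any formal model: that an attack scenario is an unordered combination of a fixed number of distinct components. The function names and the example figures are hypothetical.

```python
import math


def attack_chain_count(components, chain_length):
    """Number of unordered combinations of `chain_length` distinct components."""
    return math.comb(components, chain_length)


def fraction_covered(components, chain_length, scenarios_tested):
    """Share of those combinations exercised by `scenarios_tested` tests."""
    return scenarios_tested / attack_chain_count(components, chain_length)


# Even a modest system of 1,000 components, considering only chains of
# three components, yields 166,167,000 possible combinations.
print(attack_chain_count(1000, 3))
```

Under these assumptions, an exercise that works through 500 scenarios would cover only around three in every million of the three-component combinations, and real systems have far more than 1,000 components.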

The second issue with red teaming is that, despite best efforts, it can lack ultimate realism, meaning that its validity may be open to question. For example, red teaming is often performed during business hours for obvious reasons. One issue with this is that the blue team is on site and in the office, but what if the same attack had been performed at 3am, when the blue team was at home and asleep: would it have been detected?

A third challenge is that if a red team is created ad hoc from existing staff members (primarily a functional blue team) then it may simply lack the requisite technical know-how and – as importantly – mindset of an actual hacker and hence be unable to model how a real hack may be initiated and pursued through to completion.

Finally, a full red teaming exercise may pose some risk to systems, potentially causing data loss or a system availability outage. The risk of this occurring has to be balanced against the risk of leaving undetected the vulnerabilities that the testing might have uncovered.

In recent years a “third way” has emerged between retaining a full-time role-based red team on staff and the creation of red teams ad hoc to perform a one-off red teaming exercise. This is the concept of “bug bounty programmes”. The first known bug bounty programme was instituted in 1983 by the creators of the Versatile Real-Time Executive (VRTX) operating system (the OS that drives the Hubble Telescope), and the term itself was coined a decade later by Netscape Communications Corporation when they launched their own bug bounty programme.

Despite existing for some time, it is only in the last five years or so that bug bounty programmes have gained widespread adoption in relation to the internet-based testing of internet-facing infrastructure, in particular websites. Bug bounties operate as schemes in which third-party individuals (outside the organisation) can register and perform scoped probing of an organisation’s systems for vulnerabilities. Participants can receive recognition and compensation for reporting any security exploits and vulnerabilities that they discover.

AppCheck can help you with providing assurance in your entire organisation’s security footprint, by detecting vulnerabilities and enabling organizations to remediate them before attackers are able to exploit them. AppCheck performs comprehensive checks for a massive range of web application and infrastructure vulnerabilities – including missing security patches, exposed network services and default or insecure authentication in place in infrastructure devices.

External vulnerability scanning secures the perimeter of your network from external threats, such as cyber criminals seeking to exploit or disrupt your internet facing infrastructure. Our state-of-the-art external vulnerability scanner can assist in strengthening and bolstering your external networks, which are most-prone to attack due to their ease of access.

The AppCheck Vulnerability Analysis Engine provides detailed rationale behind each finding including a custom narrative to explain the detection methodology, verbose technical detail, and proof of concept evidence through safe exploitation.

AppCheck is a software security vendor based in the UK, offering a leading security scanning platform that automates the discovery of security flaws within organisations’ websites, applications, network, and cloud infrastructure. AppCheck is authorised by the Common Vulnerabilities and Exposures (CVE) Program as a CVE Numbering Authority (CNA).

As always, if you require any more information on this topic or want to see what unexpected vulnerabilities AppCheck can pick up in your website and applications then please contact us: info@localhost
