In the early days of computing, characters could be encoded using a variety of different encoding formats, with each typically only supporting a given language and set of characters (character set). Prior to the Unicode standard, text encoding from one region of the world would often be incompatible with a system in another. For example, a computer system that operates with purely English data would need to understand 128 different characters, but a system that works with Chinese data would need over 1000.

In 1987, Joe Becker of Xerox along with Lee Collins and Mark Davis from Apple, began investigating the practicalities of creating a universal character set that would be compatible across all systems, regions and languages. This research set out the foundations of the Unicode standard we use today.

Although Unicode was in part designed to solve interoperability issues, the evolution of the standard, the need to support legacy systems and different encoding methods can still pose a challenge.

Before we delve into Unicode attacks, the following are the main points to understand about Unicode:

As you can see from the points above, although Unicode succeeds at ensuring each character has its own unique numerical value, there are multiple ways in which these characters can be represented (encoded).

Unicode allows applications to represent strings in multiple forms. The adoption of Unicode across global interconnected systems introduced the need to eliminate non-essential differences between two strings which are intended to be the same but use a different Unicode form.

In simple terms, normalization ensures two strings that may use a different binary representation for their characters have the same binary value after normalization.

Unicode normalization is defined as: “When implementations keep strings in a normalized form, they can be assured that equivalent strings have a unique binary representation” – https://unicode.org/reports/tr15/

There are two overall types of equivalence between characters, “Canonical Equivalence” and “Compatibility Equivalence”.

Canonical Equivalent characters are assumed to have the same appearance and meaning when printed or displayed. Compatibility Equivalence is a weaker equivalence, in that two values may represent the same abstract character but can be displayed differently. There are 4 Normalization algorithms defined by the Unicode standard; NFC, NFD, NFKD and NFKD, each applies Canonical and Compatibility normalization techniques in a different way. You can read more on the different techniques at Unicode.org.

There are various reasons an application may normalize Unicode data, for example:

For example, consider a user named Chloé creates a user account on your web application using her name. The accented character é could be represented in a number of ways at binary level. One option would be to use Unicode literal U+00E9, another could be to use a combination of e and the acute accent character (eu0301). Although each version has the same meaning and visual appearance, they are stored as a different set of bytes by the system. This could create problems in a variety of scenarios such as when searching for the user on the system or when trying to ensure values are unique such as for a username or email address.

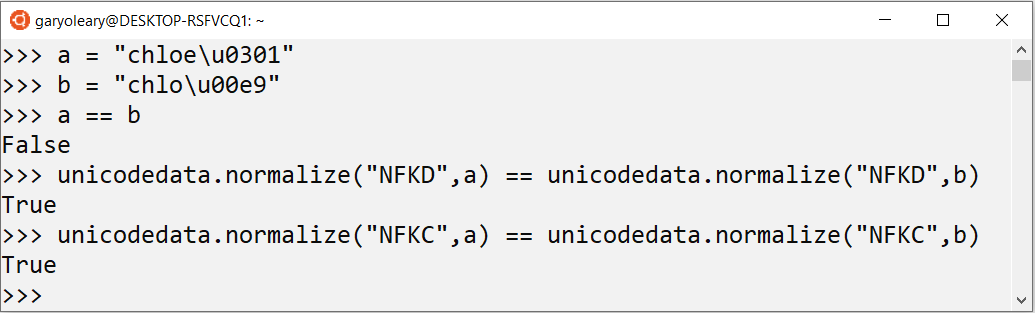

To ensure data is stored and accessed in a consistent way, Unicode normalization can be used. The screenshot below shows how Unicode normalization can be achieved within the Python programming language. The first 2 lines entered into the interpreter show the two variations of Chloé being set to the variables a and b. Next the test a == b determines if the two values are equal which returns False. The last two lines show how NFKD and NDKC normalization is used to test that the normalized versions of each string are in fact the same value.

Normalization reference table: Unicode Normalization Reference Table

Whilst normalization provides a useful tool to the developer, it can also introduce security flaws.

In the following code snippet, a query string parameter named “name_search” is read from the query string [a]. Quote characters are then stripped from the supplied value to prevent SQL Injection [b]. The value is then normalized to match normalized names that were stored in the database [c].

Submitting chloé via the name search parameter is used to build the SQL statement:

SELECT name, bio from profiles where name like '%chloé%'

<%@ Page Language="C#"%>

<%@ Import namespace="System" %>

<%@ Import namespace="System.Data" %>

<%@ Import namespace="System.Data.SqlClient" %>

<html>

<title>SQL Injection Demo</title>

<body>

<%

// [a] Read the querystring parameter "name_search"

string name_search = Request.QueryString["name_search"];

// [b] Strip quotes to prevent SQL Injection

name_search = name_search.Replace("'","");

string connection_string = System.Configuration.ConfigurationManager.ConnectionStrings["LocalSQLServer"].ConnectionString;

// [c] Normalize the name string to ensure Unicode compatibility

name_search = name_search.Normalize(NormalizationForm.FormKD);

using (SqlConnection connection = new SqlConnection(connection_string ) ) {

connection.Open();

// Search the database and return the name and bio

SqlCommand cmd = new SqlCommand("SELECT name, bio from profiles where name like '%" + name_search + "%'", connection);

SqlDataReader rdr = cmd.ExecuteReader();

while (rdr.Read()){

Response.Write("<b>Name:</b>" + rdr[0] + "</br><b>Bio:</b><br/>" + rdr[1] );

}

}

%>

</body>

</html>

The screenshots below show the same search using each variant of the accented character é, with the top window using a Latin e followed by an acute accent and the second being the literal Unicode character (U+00E9). For clarity, each variant is represented using %u encoding:

A vulnerability occurs since it is possible to insert a Unicode character which when normalized is converted to an apostrophe character which is used to delimit strings within the SQL statement. Since normalization occurs after the code to strip apostrophes, SQL injection is possible.

The Unicode Character ‘FULLWIDTH APOSTROPHE’ (U+FF07) was found to normalize to a standard Apostrophe (U+0027) when using NFKD or NFKC normalization.

Consider that the attacker wants to retrieve the username and password columns from a table named users, the following UNION SELECT statement could be injected

?name_search=chloe%uff07 UNION SELECT username, password from users --

After normalization the query is now modified to return usernames and passwords to the attacker:

SELECT name, bio from profiles where name like '%chloe' UNION SELECT username, password from users -- %'

For the purposes of clarity %u encoding is used in this blog post to encode Unicode characters. Although Microsoft IIS supports this format, %u encoding was rejected as a standard and is not universally supported. Instead, the common convention is to first encode data using UTF-8 and then % encode each byte using its hex value.

The mechanics of UTF-8 encoding is a little beyond the scope of this blog post, you can read more about UTF-8 here; https://en.wikipedia.org/wiki/UTF-8

In simple terms, Unicode characters outside of the plain text ASCII range will be represented using 2 to 4 bytes. This byte sequence includes information such as how many bytes make up the character and other information to properly decode the value.

To find the correct URL encoded value for a given Unicode character, the encodeURI javascript function can be used. For example, to encode the apostrophe character (U+FF07) used in the SQL injection example, open your browsers JavaScript console (F12) and enter the following:

The following code snippet is vulnerable to Cross-Site Scripting (XSS) due to a flawed input filter. XSS vulnerabilities occur when data submitted to the application is returned within the page without proper encoding or sanitization. The attacker can exploit XSS flaws by embedding a malicious JavaScript payload within the affected parameter. When the data is reflected back into the server’s response, the JavaScript payload is able to modify the page, perform actions on behalf of the user and access sensitive data such as cookies and local storage.

The code snippet exhibits a similar flaw to the SQL Injection example. As in the previous example, the developer has identified that certain characters need to be dealt with to prevent a security flaw. In this case the user supplied parameter “msg” is read from the query string [a] and then the < and > characters are replaced for their HTML entity equivalents < and > [b]. In some cases this would prevent the attacker being able to build a HTML tag to embed the payload:

<%@ Page Language="C#"%>

<html>

<body>

<%

// [a] Read the msg query string parameter and store it in msg variable

string msg = Request.QueryString["msg"];

// [b] Encode < and > characters to prevent XSS

msg = msg.Replace("<","<");

msg = msg.Replace(">",">");

// [c] Normalize Unicode characters

string clean_string = msg.Normalize(NormalizationForm.FormKD);

Response.Write("<b>Welcome:</b>" + clean_string +"<br/>");

%>

</body>

</html>

As in our previous example, the filter here is acting specifically on the plain text (ASCII) variants of < and > but later in the code is performing Unicode normalization [c]. We can therefore bypass this filter by submitting Unicode characters which are not matched by the filter but are normalized back to the plain text characters we need to form a malicious tag.

A review of the reference table reveals several possible variants depending on the normalization form used. For the purpose of this example, we will use ‘SMALL LESS-THAN SIGN (U+FE64)’ and ‘FULLWIDTH GREATER-THAN SIGN (U+FF1E)

In this first screenshot we have entered a <img> tag payload designed to execute the JavaScript payload alert(123) but without encoding;

<img src=a onerror="alert(123)">.

Since this code example is implemented using ASP .NET, the built-in security filter catches our attack even before reaching the validation code:

However, encoding our payload bypasses both the ASP .NET filter and our own filter:

During security testing you may wish to identify when Unicode normalization is implemented prior to applying encoding to your attack payloads. This is especially useful when the target component requires multiple steps in order to test it. For instance, AppCheck have encountered many targets where data is normalized before being written to a database but only materialises when later retrieved via another application component, in some cases only once a user had logged out and back in again (reloading data from database).

A common practice in security testing is to use “polyglot payloads”, which is an exotic term to mean a single universal payload that us used to identify multiple variants of the same flaw. A review of the Unicode Normalization reference table shows 3 characters which will decode to the same ASCII value when normalized using any of the normalization formats (NFC, NFD, NFKD and NFKC).

Of the 3 characters, the ‘KELVIN SIGN’ (U+0212A), which normalizes to an uppercase ‘K’ is perhaps the most useful since its decoded value is unlikely to be caught by any security filter (the other two normalizing to a backtick and semicolon).

Therefore, we can identify Unicode normalization via the following steps:

Note that this technique is useful for identifying components that are performing Unicode Normalization before expanding your attacks to include encoding techniques described in this blog post. However, some components may be vulnerable but may not provide a mechanism to identify the normalization format through reflection. It is therefore recommended that Unicode encoding be used for all key vulnerabilities such as SQL injection and Cross-Site Scripting even when you cannot identify normalization ahead of time.

Invalid Unicode attacks: https://security.stackexchange.com/questions/48879/why-does-directory-traversal-attack-c0af-work

Spotify Unicode Normalization vulnerability: https://labs.spotify.com/2013/06/18/creative-usernames/

No software to download or install.

Contact us or call us 0113 887 8380

AppCheck is a software security vendor based in the UK, offering a leading security scanning platform that automates the discovery of security flaws within organisations websites, applications, network and cloud infrastructure. AppCheck are authorised by the Common Vulnerabilities and Exposures (CVE) Program as a CVE Numbering Authority (CNA)